A Personal Journey into Self-Rewriting Code

I vividly remember the excitement I felt around fifteen years ago when I first encountered the concept of self-rewriting code in Chapter 27, titled “Polymorphic Viruses,” of The Giant Black Book of Computer Viruses by Mark Ludwig. This chapter opened my eyes to the ingenious ways that software could dynamically alter its own code to evade detection and analysis. At the time, I was engrossed in tackling crackme challenges from the now-defunct crackmes.de community, sharpening my skills with tools like OllyDbg. The intersection of these experiences fueled my fascination with self-modifying code as both an art form and a powerful technique, inspiring me to explore its profound implications beyond malware into the realm of resilient and adaptive systems.

Self-rewriting code, or self-modifying code (SMC), stands as one of the most provocative ideas in the evolution of computer software architecture. While scholars and practitioners once viewed it as a novelty or, in some cases, a dangerous anomaly, this technique has emerged at the epicenter of resilient system design. Resilient systems are the backbone of autonomous vehicles, fault-tolerant embedded devices, secure financial infrastructures, and many other fields where reliability, autonomy, and security are not just features but absolute necessities. This article explores the journey of self-rewriting code from its roots in early digital computation to its powerful applications in resilient systems. Drawing from scientific literature and modern engineering, it illuminates the role self-modifying logic plays in building systems that can outmaneuver faults, recover from attacks, and maintain functionality in unpredictable environments.

At its core, self-rewriting code refers to program logic that can inspect, manipulate, and alter its own executable instructions during runtime. This capability is possible thanks to the von Neumann (stored-program) model of computer architecture, which keeps code and data in the same modifiable memory space. In the early days of computing, programmers used self-modifying code mostly to save memory and optimize performance, particularly when hardware resources were scarce. For instance, it was common for assembly programmers to patch loops or insert new logic dynamically to respond to hardware triggers or environmental conditions. Despite its practical beginnings, the technique fell out of mainstream favor as advances in compiler theory, hardware architectures, and software engineering practices promoted clarity, maintainability, and predictability. Yet the same unrestricted flexibility that made SMC a risk also concealed its potential for the challenges posed by modern resilient system design.

The Shift Toward Resilience and Security

Over time, the context for self-rewriting code shifted from optimization and creative hacking to focus on resilience and security. In the current landscape, resilience means far more than just uptime or redundancy; it’s a system’s holistic ability to withstand, detect, correct, and learn from disruptions—whether those are software bugs, hardware faults, or external threats like malware and cyber-attacks. To develop true resilience, systems must go beyond passive defenses. They must actively adapt. Self-modification enables such behavioral plasticity, allowing code to mutate in response to detected issues, heal itself in real time, or even proactively shift execution strategies to deter analysis or tampering. Where static code can become a liability, especially if its structure becomes well-known to attackers, dynamic code offers unpredictability and continued evolution.

Self-rewriting behavior can be implemented at several levels of abstraction. At the lowest level, machine code or assembly can directly replace instructions in memory. This was once straightforward on hardware without memory protection, but instruction caches, code signing, and memory management units that keep executable pages non-writable have made raw modification harder and, as a side effect, systems more secure. Even so, engineers still employ self-modification on protected systems through just-in-time (JIT) compilers, dynamic interpreters, and controlled code generation routines. Rather than editing raw binary, software can construct, compile, and execute new code on the fly, an approach sometimes referred to as “metaprogramming” or “runtime code synthesis.” In high-level languages, similar effects are achieved with constructs like reflection and dynamic evaluation, where objects or functions can be redefined at runtime. These approaches, although architecturally distinct, all satisfy the core idea: the running program is not a static artifact but an adaptable, evolving entity, equipped to rewrite itself for better behavior or survival.
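As a small illustration of the high-level route, the sketch below builds a function from source text at runtime with Python's built-in `compile` and `exec`. The names `synthesize_scaler` and `scale` are hypothetical and chosen only for this example; it is a minimal sketch of runtime code synthesis, not a hardened design.

```python
def synthesize_scaler(factor):
    """Build a specialised function from source text at runtime."""
    source = f"def scale(x):\n    return x * {factor!r}\n"
    namespace = {}
    # compile() turns the text into a code object; exec() runs the definition
    exec(compile(source, "<synthesized>", "exec"), namespace)
    return namespace["scale"]


double = synthesize_scaler(2)
triple = synthesize_scaler(3)
print(double(21), triple(5))  # 42 15
```

Each call produces a new function object whose body did not exist in the program text, which is the essence of the "construct, compile, and execute" approach described above.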

Building resilience with such code hinges on several key capabilities. First and most fundamentally, SMC can enable autonomous recovery from faults. Consider a mission-critical embedded controller for an industrial robot: if part of the code responsible for sensor data interpretation fails due to a hardware error, a conventional system would either crash or revert to a cold backup routine. If equipped with self-rewriting ability, the controller can instead generate a new instance of its sensor routine, possibly consulting an alternative logic path, swapping in repair code, or even integrating lessons gleaned from machine learning models monitoring the error. Crucially, this kind of dynamic, in-place recovery minimizes downtime and doesn’t rely solely on redundancy. Instead, the system becomes self-healing—a property that is exceedingly valuable anywhere service interruptions are costly or dangerous.
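The recovery idea can be sketched in a few lines, assuming a controller that keeps its sensor routine in a replaceable slot. `SensorController`, `faulty_decoder`, and `repair_decoder` are hypothetical names, and the "repair code" here is prewritten rather than generated.

```python
class SensorController:
    """Keeps the active sensor routine in a slot that can be swapped."""

    def __init__(self, primary, alternatives):
        self.read = primary                  # currently installed routine
        self.alternatives = list(alternatives)

    def sample(self, raw):
        while True:
            try:
                return self.read(raw)
            except ValueError:
                if not self.alternatives:
                    raise                    # nothing left to try
                # Swap in the next repair routine instead of crashing
                self.read = self.alternatives.pop(0)


def faulty_decoder(raw):
    raise ValueError("decoder fault")


def repair_decoder(raw):
    return raw / 10.0


ctrl = SensorController(faulty_decoder, [repair_decoder])
print(ctrl.sample(215))                # 21.5
print(ctrl.read is repair_decoder)     # True
```

The replacement stays installed after the first fault, so later samples no longer pay the cost of the failed routine.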

Design Diversity and Runtime Mutation

Design diversity and software variant generation represent another axis where SMC promotes resilience. By creating multiple versions of critical routines, systems lower the probability that a single failure, bug, or exploit can compromise their function. This strategy, known as N-version programming, has a deep history in both software engineering and safety-critical industries. SMC automates and individualizes this process. Rather than using a static pool of alternatives, the system can generate tailored logic instances at runtime, for example by mutating checksums, varying algorithmic paths, or introducing subtle timing changes, so that neither a recurring hardware fault nor a prepared exploit meets the same code twice. The result is a moving target that both masks and absorbs faults.
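The fault-masking half of this idea can be shown with a majority vote over variants. In this sketch the three variants are written by hand, whereas a self-modifying system would generate them at runtime; `make_variants` and `vote` are illustrative names.

```python
from collections import Counter


def make_variants():
    """Return three implementations of 'sum of 1..n'; one is faulty."""
    def closed_form(n):
        return n * (n + 1) // 2

    def iterative(n):
        total = 0
        for i in range(1, n + 1):
            total += i
        return total

    def buggy(n):
        return n * n // 2          # deliberately wrong

    return [closed_form, iterative, buggy]


def vote(variants, arg):
    """Run every variant and return the strict-majority result."""
    results = Counter(v(arg) for v in variants)
    value, count = results.most_common(1)[0]
    if count <= len(variants) // 2:
        raise RuntimeError("no majority among variants")
    return value


print(vote(make_variants(), 10))  # 55
```

The faulty variant is outvoted, so the caller never sees its result.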

In many modern implementations, systems combine SMC with formal runtime validation. For instance, self-checking code modules can continuously inspect their own integrity, verify outputs against expected models, and rewrite themselves when discrepancies arise. Academic research supports this practice with formal models and frameworks that govern safe code mutation, ensuring important invariants—like safety and liveness—are not violated in the process. These efforts allow resilient systems to merge creativity with caution, repairing themselves only within the boundaries that prevent unpredictable or unsafe behavior.
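A minimal sketch of a self-checking routine, assuming CPython: the function's bytecode is fingerprinted with `hashlib.sha256`, and a known-good code object is restored when the fingerprint no longer matches. `fingerprint`, `check_and_repair`, and the "golden" copies are hypothetical names, and keeping the reference copy in the same process is a simplification.

```python
import hashlib


def fingerprint(func):
    """Hash the function's bytecode and constants."""
    code = func.__code__
    return hashlib.sha256(code.co_code + repr(code.co_consts).encode()).hexdigest()


def compute(x):
    return x + 1


GOLDEN_CODE = compute.__code__       # trusted reference copy
GOLDEN_HASH = fingerprint(compute)


def check_and_repair(func):
    """Restore the trusted code object if the function was altered."""
    if fingerprint(func) != GOLDEN_HASH:
        func.__code__ = GOLDEN_CODE
        return True
    return False


def tampered(x):
    return x - 1


compute.__code__ = tampered.__code__   # simulate corruption
print(compute(1))                      # 0
print(check_and_repair(compute))       # True
print(compute(1))                      # 2
```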

Security Through Obfuscation and Tamper Resistance

Security and obfuscation are perhaps the most widely recognized frontiers for self-rewriting code. In the realm of defensive software, SMC provides formidable defenses against tampering, reverse engineering, and unauthorized modification. By regularly changing instruction order, register usage, and data layouts, self-mutating code thwarts both human and automated analysis. Malware analysts and attackers often rely on pattern recognition, deterministic logic flows, and static disassembly to dissect a system’s secrets. When confronted with SMC, every analysis run could encounter a slightly different binary landscape: code blocks may move, branches might invert, or unique “junk” code could be generated afresh. The literature describes concrete techniques such as register renaming, control flow rewriting, instruction substitution, and the strategic injection of opaque predicates: conditions whose outcome is fixed and known to the obfuscator but hard for an analyst to determine, so they add apparent branches without changing the program’s output.
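To make the opaque predicate concrete, here is a toy sketch. It relies on the fact that the product of two consecutive integers is always even; `opaquely_true` and `protected_add` are hypothetical names, and real obfuscators use predicates that are much harder to recognize.

```python
import random


def opaquely_true(x):
    # x * (x + 1) is a product of consecutive integers, so it is always even
    return (x * (x + 1)) % 2 == 0


def protected_add(a, b):
    noise = random.randrange(1, 1_000_000)
    if opaquely_true(noise):
        return a + b              # real logic, always taken
    return a * b - noise          # decoy branch, never executed


print(protected_add(2, 3))  # 5
```

A static analyzer that cannot prove the predicate constant has to treat both branches as live, which inflates the apparent control flow without affecting the result.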

Challenges: Debugging, Certification, and Performance

The defensive power of such obfuscation is not without complexity, however. Debugging and certifying systems built on mutable logic are arduous tasks. Traditional software verification relies on stable source maps and repeatable execution paths, and self-modifying code, by definition, shifts these underlying structures. The result is a need for new approaches to testing, such as model checking adaptable architectures, runtime trace validation, and hybrid testing using twins, in which parallel, non-modifying runs serve as baselines for mutated instances. Formal verification of SMC remains an active research area, as theorists and practitioners seek to guarantee that autonomous mutations do not inadvertently introduce new, latent failures.
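Twin testing can be sketched as a differential check: the unmodified routine and the mutated one receive the same inputs, and any divergence is reported. The "mutated" variant below is a hand-written insertion sort standing in for the output of a hypothetical mutation engine.

```python
def baseline(values):
    return sorted(values)


def mutated(values):
    # Stand-in for a runtime-generated variant: insertion sort
    out = []
    for v in values:
        i = len(out)
        while i > 0 and out[i - 1] > v:
            i -= 1
        out.insert(i, v)
    return out


def twin_check(reference, candidate, inputs):
    """Return the inputs on which the candidate diverges from the reference."""
    return [x for x in inputs if reference(list(x)) != candidate(list(x))]


cases = [[3, 1, 2], [], [5, 5, 1], [9, -4, 0, 7]]
print(twin_check(baseline, mutated, cases))  # []
```

An empty result only shows agreement on the sampled inputs; it is evidence, not proof, that the mutation preserved behavior.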

Performance implications also play a role. Runtime mutation and constant self-checking can add overhead, from CPU cycles devoted to rewriting or repairing code, to cache synchronization problems when instruction memory changes mid-execution. Designers of scalable systems must weigh the benefits of dynamic adaptation against the cost in terms of speed, determinism, and energy. Appropriate application and scope for SMC thus becomes a question of system context, risk tolerance, and the criticality of continuous operation.

Real-World Applications and Case Studies

Numerous real-world systems have demonstrated the success and promise of these techniques. The Linux kernel, for example, routinely uses runtime patching (its “alternatives” mechanism) to adapt itself to the specific features and flaws of the underlying hardware. As the kernel boots, it probes the CPU, identifies which bugs or optimizations apply, and rewrites machine instructions in place to ensure correctness and maximum throughput. More experimental systems, such as the Synthesis kernel, pioneered highly granular JIT re-optimization and self-synthesis, that is, the generation of new protocol handlers on demand to meet evolving requirements. These implementations reveal an exciting pattern: by embracing software as living, mutable, and introspective, resilience becomes both inherent and routine.
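The probe-then-patch pattern has a loose high-level analogue, sketched below. This is only an analogy: the kernel rewrites machine instructions, whereas this code rebinds a name once at startup. The function names are illustrative, and `int.bit_count` is assumed to be present only on Python 3.10 or later.

```python
def popcount_generic(n):
    return bin(n).count("1")


def popcount_native(n):
    return n.bit_count()          # int.bit_count exists on Python 3.10+


# The generic version is installed first, like an unpatched instruction sequence
popcount = popcount_generic


def apply_alternatives():
    """Probe the runtime once and rebind `popcount` to the best variant."""
    global popcount
    if hasattr(int, "bit_count"):
        popcount = popcount_native


apply_alternatives()
print(popcount(0b101101))  # 4
```

After the one-time probe, callers pay no per-call cost for feature detection, which is the main reason to patch early rather than branch on every call.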

Critical embedded systems, from aerospace controllers to medical devices and autonomous vehicles, are also increasingly employing SMC-inspired techniques. These fields require guaranteed uptime, adaptability to environmental disturbances, and rapid, cost-minimizing recovery from unforeseen errors. Adaptive firmware, capable of rewriting itself based on sensor input or threat detection, can ensure continued operation without the need for risky over-the-air updates or prolonged manual intervention. Such systems may, for instance, generate new routines for degraded hardware paths, reroute around failed sensors, or even adopt new cryptographic keys in response to threats—all without leaving the field.

Security-sensitive environments drive SMC research in still other directions. In both defense and industry, software protection relies on increasing the asymmetry between the defender and the attacker. By changing code structure at runtime, protected systems can make reverse engineering economically and technically prohibitive. Organizations may also employ self-modifying obfuscation as a countermeasure to intellectual property theft, implementing routines that morph and reshape themselves so that even side-channel analysis—techniques that analyze power use, electromagnetic emissions, or execution timing—becomes far more complex. Academic and industry researchers have explored dynamic watermarking and metamorphic code in this context, devising cryptographic protocols that only function when the underlying execution environment remains both authentic and continuous, thanks to ever-changing code.

Despite these powerful applications, challenges and limitations remain central to any discussion about SMC for resilience. First, mutable code threatens stability if not tightly managed. An unchecked rewrite may escape original design intentions, introducing vulnerabilities or logic errors. The act of evolving one’s own logic raises deep questions about predictability, especially for systems operating in regulated contexts like aviation or healthcare. Passive predictability, enforced through code freezes and extensive regression testing, is hard to square with runtime mutation. As a result, many modern frameworks include rollback mechanisms, transaction-style auditing of mutations, and even voting-based consensus among multiple code variants to ensure that resilience doesn’t come at the expense of safety or compliance.
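A rollback mechanism with a simple audit trail might look like the following sketch. `HandlerSlot`, its methods, and the acceptance test are hypothetical; a production system would also need to guard against concurrent callers during the swap.

```python
class HandlerSlot:
    """Holds the active routine plus a history that allows rollback."""

    def __init__(self, initial):
        self.active = initial
        self.history = []      # previous routines, newest last
        self.audit = []        # (action, routine name) records

    def mutate(self, candidate, acceptance_test):
        """Install the candidate, then undo the change if the test fails."""
        self.history.append(self.active)
        self.active = candidate
        self.audit.append(("install", candidate.__name__))
        if not acceptance_test(self.active):
            self.rollback()
            return False
        return True

    def rollback(self):
        self.active = self.history.pop()
        self.audit.append(("rollback", self.active.__name__))


def stable(x):
    return abs(x)


def bad_rewrite(x):
    return x                   # loses the non-negative guarantee


slot = HandlerSlot(stable)
ok = slot.mutate(bad_rewrite, lambda f: f(-3) >= 0)
print(ok, slot.active.__name__)  # False stable
print(slot.audit)
```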

There are also issues of observability, explainability, and certification. Regulators and certification bodies rely on well-understood, documented code paths to grant their seals of safety. SMC, by its nature, complicates the production of documentation and trace evidence. This challenge has fueled research into “transparent self-modifying systems” where mutation logs, execution traces, and code diffs are recorded in trusted monitoring systems. The marriage of explainability tools with self-rewriting architectures is a vibrant frontier in both dependable computing and artificial intelligence.
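One way to picture such a mutation log is a record holding content hashes and a textual diff for every rewrite, as in the sketch below. The `record_mutation` function and its field names are assumptions made for illustration; a real system would write the entries to a trusted, append-only store.

```python
import difflib
import hashlib


def record_mutation(log, old_src, new_src, reason):
    """Append a hash-and-diff record of one code mutation to the log."""
    diff = list(difflib.unified_diff(
        old_src.splitlines(), new_src.splitlines(),
        fromfile="before", tofile="after", lineterm=""))
    entry = {
        "reason": reason,
        "old_sha256": hashlib.sha256(old_src.encode()).hexdigest(),
        "new_sha256": hashlib.sha256(new_src.encode()).hexdigest(),
        "diff": diff,
    }
    log.append(entry)
    return entry


mutation_log = []
before = "def handler():\n    return 'retry'\n"
after = "def handler():\n    return 'reset'\n"
entry = record_mutation(mutation_log, before, after, "retry limit exceeded")
print("\n".join(entry["diff"]))
```

The hashes let an auditor confirm which exact version ran, while the diff explains what changed in human-readable form.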

The Future: AI-Enhanced Self-Healing Architectures

Looking forward, scientific perspectives emphasize the blending of SMC with artificial intelligence and machine learning. In this vision, systems autonomously analyze their own operation, synthesize and test new variants, and optimize not only for performance but for survival. AI-powered self-healing architectures may become the norm for cloud services, autonomous vehicles, and unmanned systems, where rapid adaptation to cybersecurity threats or unexpected failures provides a crucial competitive advantage. These trends are reinforced by advances in modeling languages, constraint-based programming, and automated verification—new frameworks that allow dynamic and highly adaptive code to coexist with requirements for traceability, validation, and systematic change control.

Academic literature provides a wealth of foundational models and experimental results to guide this evolution. Papers like “A Model for Self-Modifying Code” set out the theoretical landscape for disciplined, provable modifications. Surveys such as “Dependability in Embedded Systems: A Survey of Fault Tolerance Methods” offer pragmatic insights into how design diversity and runtime mutation combine for robust fault masking and recovery. Other research, including work on self-checking hardware architectures, clarifies how the same principles of runtime error correction and dynamic code regeneration apply across both software and embedded hardware domains.

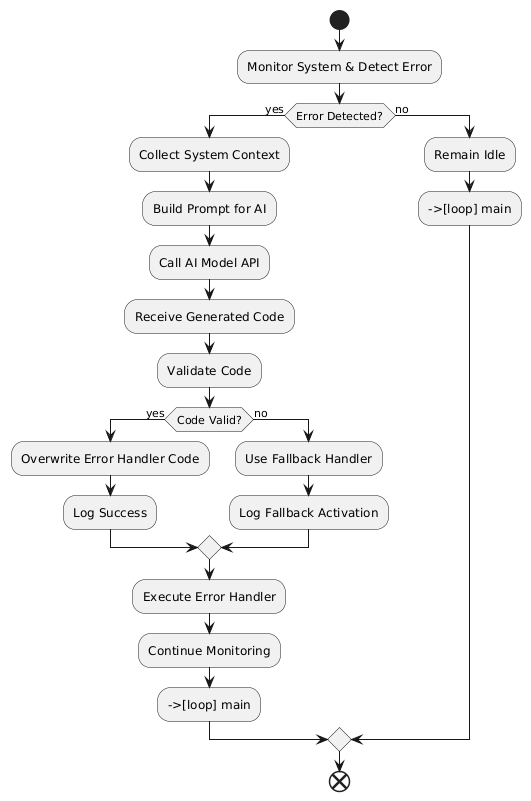

AI-Driven Self-Rewriting Code Flow

The accompanying flow diagram illustrates the dynamic process by which a resilient system leverages AI to generate and update its own error-handling code at runtime. When the system detects an error, it first collects relevant contextual data, such as error severity and system load, to inform the AI model. The AI then receives a prompt constructed from this context and generates new, customized error recovery instructions. These instructions undergo validation for safety and correctness. If the validation succeeds, the system overwrites its existing error handler with the AI-generated code, thereby evolving its behavior dynamically. If the generated code fails validation, a safe fallback handler is used instead. This continuous loop of monitoring, code synthesis, validation, and execution enables the system to adapt autonomously to changing conditions and unexpected faults, embodying the future vision of self-healing, adaptive software architectures.

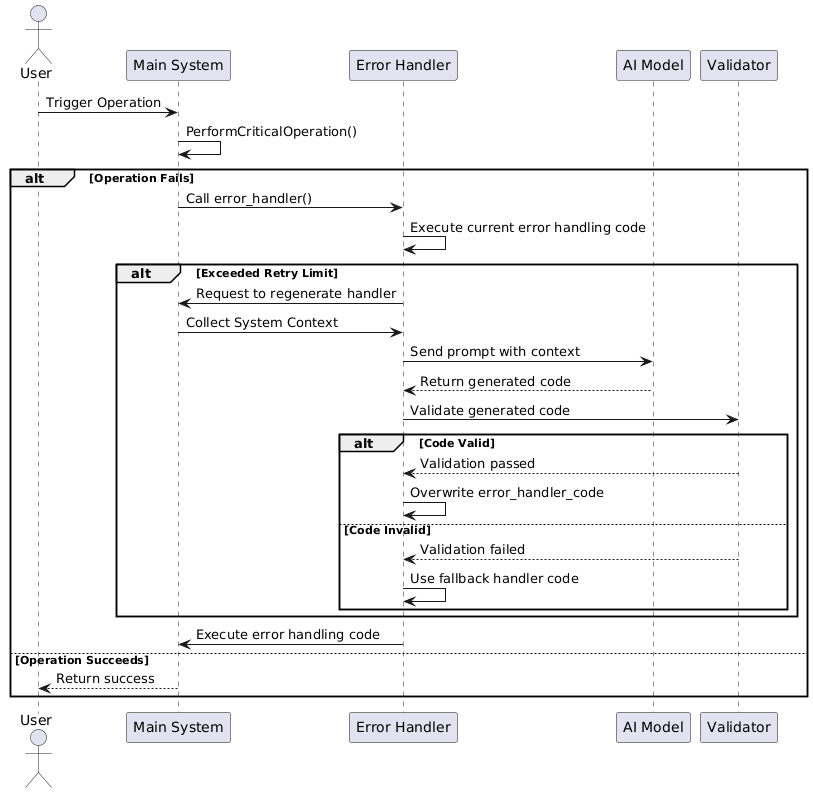

The sequence diagram depicts the detailed interactions among the core components involved in the AI-powered self-rewriting error recovery process. The flow begins with the main system attempting to perform a critical operation. Upon encountering a failure, the main system calls the error handler, which initially executes the existing error-handling logic. If a predefined retry limit is exceeded, the error handler signals the need to regenerate itself.

This triggers the collection of current system context, including error severity and system load, which is then sent as a prompt to the AI code generation model. The AI model processes the prompt and returns newly synthesized error-handling code tailored to the situation. Before applying this code, the system forwards it to a validator module that examines its safety and correctness. If the validation passes, the error handler overwrites its previous code with the AI-generated logic. Otherwise, it falls back to a predefined safe error handler to maintain reliability.

The updated or fallback error handler then executes its instructions, and the main system resumes operation with improved resilience. This sequence of monitoring, code generation, validation, and execution enables the system to continuously adapt and recover dynamically, showcasing an advanced realization of autonomous, self-healing software architectures.

A simplified, yet illustrative, pseudocode representation of this algorithm could be the following:

    collect_contextual_data():
        // Gather relevant runtime data influencing error handling
        error_severity = get_current_error_severity()
        system_load = get_system_cpu_load()
        memory_status = check_memory_usage()
        network_conditions = assess_network_state()
        return {
            "error_severity": error_severity,
            "system_load": system_load,
            "memory_status": memory_status,
            "network_conditions": network_conditions
        }

    AI.generate_code(prompt):
        // Use AI model to generate new error handler code based on prompt
        response = ai_model.query(prompt)
        generated_code = parse_response_to_code(response)
        return generated_code

    validate_code(code):
        // Validate synthesized code for safety, correctness, and compliance
        if syntax_check(code) == false:
            return false
        if safety_analysis(code) == false:
            return false
        if passes_unit_tests(code) == false:
            return false
        return true

    overwrite_error_handler(new_code):
        // Atomically replace current error handler implementation with new_code
        pause_error_handler_execution()
        write_new_code_in_memory(new_code)
        resume_error_handler_execution()

    use_fallback_error_handler():
        // Revert to a predefined safe error handler implementation
        load_predefined_fallback_handler()

    perform_critical_operation():
        // Execute key system function that may fail and require error handling
        result = try_to_execute_main_task()
        if result == failure:
            raise OperationFailure

    retry_limit_exceeded():
        // Determine if retry threshold for error recovery attempts is passed
        retry_count = get_retry_attempts()
        max_retries = get_max_retry_limit()
        return retry_count >= max_retries

    create_prompt(context):
        // Format collected context data into AI prompt for code generation
        prompt = "Generate error handler for severity: " + context["error_severity"]
        prompt += ", system load: " + context["system_load"]
        prompt += ", memory: " + context["memory_status"]
        prompt += ", network: " + context["network_conditions"]
        prompt += ". Provide safe, optimized recovery code."
        return prompt

    AI_code_generator(prompt):
        // Interface to AI model that synthesizes code from prompt
        return AI.generate_code(prompt)

    validator(new_code):
        // Wrapper to encapsulate all validation checks on new code
        return validate_code(new_code)

    use_safe_fallback_handler():
        // Load a verified safe error handler as last-resort solution
        use_fallback_error_handler()

    main_system_operation():
        // Tie the helpers together: retry, then regenerate the handler
        loop:
            try:
                perform_critical_operation()
                return
            catch OperationFailure:
                error_handler()
                if retry_limit_exceeded():
                    context = collect_contextual_data()
                    new_code = AI_code_generator(create_prompt(context))
                    if validator(new_code):
                        overwrite_error_handler(new_code)
                    else:
                        use_safe_fallback_handler()

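Translated into C, the error handler becomes a function pointer that the program reassigns at runtime. Standard C cannot compile a source string into machine code on the fly, so the example simulates the AI-generated handler with a stand-in that is compiled into the program and swaps the pointer to it; no instruction memory is actually overwritten.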

    #define _POSIX_C_SOURCE 200809L  // exposes strdup() under strict -std=c11

    #include <stdio.h>
    #include <stdlib.h>
    #include <stdbool.h>
    #include <string.h>

    // Mock system context data structure
    typedef struct {
        int error_severity;
        int system_load;
        int memory_status;
        int network_conditions;
    } Context;

    // Pointer to the currently installed error handler
    void (*error_handler)(void);

    // Mock functions to get system context (stub implementations)
    int get_current_error_severity(void) { return 2; }
    int get_system_cpu_load(void) { return 75; }
    int check_memory_usage(void) { return 60; }
    int assess_network_state(void) { return 3; }

    // Collect contextual data relevant for AI prompt generation
    Context collect_contextual_data(void) {
        Context ctx;
        ctx.error_severity = get_current_error_severity();
        ctx.system_load = get_system_cpu_load();
        ctx.memory_status = check_memory_usage();
        ctx.network_conditions = assess_network_state();
        return ctx;
    }

    // Placeholder AI code generation simulator; the caller frees the result
    char* AI_generate_code(const char* prompt) {
        printf("AI generating code with prompt: %s\n", prompt);
        // In real life, this would call an AI service and return synthesized code.
        // Here we simulate by returning a string representing "new" code.
        return strdup("void new_error_handler() { printf(\"Recovered from error.\\n\"); }");
    }

    // Placeholder code validation: only rejects a missing string
    bool validate_code(const char* code) {
        printf("Validating generated code...\n");
        // Real syntax and safety checks would go here
        return code != NULL;
    }

    // Overwrite the current error handler with a new implementation
    void overwrite_error_handler(void (*new_handler)(void)) {
        error_handler = new_handler;
        printf("Error handler overwritten.\n");
    }

    // Predefined safe fallback error handler
    void fallback_error_handler(void) {
        printf("Fallback error handler executed.\n");
    }

    // Stand-in for the compiled form of the AI-generated code. Standard C has
    // no lambdas and cannot compile a string at runtime, so it is precompiled.
    void ai_generated_error_handler(void) {
        printf("New error handler executed after AI rewrite.\n");
    }

    // Simulated critical operation: fails on the first four attempts so that
    // the rewritten handler runs once; returns 0 on success, non-zero on failure
    int perform_critical_operation(void) {
        static int attempt = 0;
        attempt++;
        if (attempt <= 4) {
            printf("Critical operation failed on attempt %d.\n", attempt);
            return -1; // Simulate failure
        }
        printf("Critical operation succeeded.\n");
        return 0; // Success
    }

    // Check if retry limit exceeded
    bool retry_limit_exceeded(int retry_count, int max_retries) {
        return retry_count >= max_retries;
    }

    // Create AI prompt from context
    void create_prompt(Context ctx, char* buffer, size_t bufsize) {
        snprintf(buffer, bufsize,
                 "Generate error handler for severity: %d, system load: %d, memory: %d, network: %d. Provide safe, optimized recovery code.",
                 ctx.error_severity, ctx.system_load, ctx.memory_status, ctx.network_conditions);
    }

    // Safe fallback handler function
    void safe_fallback_handler(void) {
        fallback_error_handler();
    }

    // Initial error handler (default)
    void default_error_handler(void) {
        printf("Default error handler executed.\n");
    }

    // Main system operation with self-rewriting error handling
    void main_system_operation(void) {
        int max_retries = 3;
        int retry_count = 0;
        error_handler = default_error_handler;

        while (true) {
            if (perform_critical_operation() == 0) {
                break; // Operation succeeded, exit loop
            }
            error_handler();
            retry_count++;
            if (retry_limit_exceeded(retry_count, max_retries)) {
                Context ctx = collect_contextual_data();
                char prompt[512];
                create_prompt(ctx, prompt, sizeof(prompt));
                char* generated_code = AI_generate_code(prompt);
                if (validate_code(generated_code)) {
                    // Simulate compiling the generated code and installing it
                    overwrite_error_handler(ai_generated_error_handler);
                } else {
                    // Install the safe handler when validation fails
                    overwrite_error_handler(safe_fallback_handler);
                }
                free(generated_code);
                retry_count = 0; // Fresh retry budget for the new handler
            }
        }
    }

    int main(void) {
        main_system_operation();
        return 0;
    }

In the C implementation above, the handler is switched by reassigning a function pointer; the generated source string itself is never compiled or executed. An interpreted language can go one step further and run the generated text directly. The following Python version of the same algorithm does so:

    # System context mock functions
    def get_current_error_severity():
        return 2

    def get_system_cpu_load():
        return 75

    def check_memory_usage():
        return 60

    def assess_network_state():
        return 3

    # Collect runtime contextual data
    def collect_contextual_data():
        return {
            "error_severity": get_current_error_severity(),
            "system_load": get_system_cpu_load(),
            "memory_status": check_memory_usage(),
            "network_conditions": assess_network_state(),
        }

    # Simulated AI code generation given a prompt
    def AI_generate_code(prompt):
        print(f"AI generating code with prompt: {prompt}")
        # In a real scenario, this would call an AI service returning executable code.
        # Here we simulate it with a string that defines a new error handler function.
        code = (
            "def new_error_handler():\n"
            "    print('New error handler executed after AI rewrite.')\n"
        )
        return code

    # Validate dynamically generated code (always True here)
    def validate_code(code_str):
        print("Validating generated code...")
        # Real validation would include syntax checks, safety analysis, etc.
        return True

    # Execute dynamic code string and extract new error handler function
    def execute_and_get_handler(code_str):
        local_vars = {}
        exec(code_str, {}, local_vars)
        return local_vars.get("new_error_handler")

    # Default error handler function
    def default_error_handler():
        print("Default error handler executed.")

    # Fallback error handler function
    def fallback_error_handler():
        print("Fallback error handler executed.")

    # Simulated critical operation: fails four times so that the rewritten
    # handler gets to run once, then succeeds
    def perform_critical_operation():
        if perform_critical_operation.attempts < 4:
            print(f"Critical operation failed on attempt {perform_critical_operation.attempts + 1}.")
            perform_critical_operation.attempts += 1
            return False
        print("Critical operation succeeded.")
        return True

    perform_critical_operation.attempts = 0

    # Check if retry limit exceeded
    def retry_limit_exceeded(retry_count, max_retries):
        return retry_count >= max_retries

    # Create AI prompt from context data
    def create_prompt(context):
        return (
            f"Generate error handler for severity: {context['error_severity']}, "
            f"system load: {context['system_load']}, memory: {context['memory_status']}, "
            f"network: {context['network_conditions']}. Provide safe, optimized recovery code."
        )

    # Main operation demonstrating self-rewriting logic
    def main_system_operation():
        max_retries = 3
        retry_count = 0
        error_handler = default_error_handler

        while True:
            if perform_critical_operation():
                break
            error_handler()
            retry_count += 1
            if retry_limit_exceeded(retry_count, max_retries):
                context = collect_contextual_data()
                prompt = create_prompt(context)
                generated_code = AI_generate_code(prompt)
                new_handler = None
                if validate_code(generated_code):
                    new_handler = execute_and_get_handler(generated_code)
                if new_handler:
                    error_handler = new_handler
                    print("Error handler overwritten to new AI-generated handler.")
                else:
                    # Validation failed or no handler was defined: install the safe one
                    error_handler = fallback_error_handler
                retry_count = 0  # Fresh retry budget for the new handler

    # Run the main operation
    if __name__ == "__main__":
        main_system_operation()

As we can see, the new error handler is defined as a string and later executed at runtime, thereby dynamically integrating the new logic into the running program.
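The `validate_code` stub in the Python listing accepts everything. As a hedged sketch of what a first validation layer could look like, the version below parses the generated text with the standard `ast` module and rejects anything beyond a single function that calls allowlisted names. The allowlist and the `expected_name` parameter are assumptions for illustration, and a syntactic filter like this is not a sandbox.

```python
import ast

ALLOWED_CALLS = {"print"}     # assumption: the only calls a handler may make


def validate_code(code_str, expected_name="new_error_handler"):
    """Accept only a single, simple function definition with the expected name."""
    try:
        tree = ast.parse(code_str)
    except SyntaxError:
        return False
    # The module must consist of exactly one function definition
    if len(tree.body) != 1 or not isinstance(tree.body[0], ast.FunctionDef):
        return False
    if tree.body[0].name != expected_name:
        return False
    for node in ast.walk(tree):
        # Reject imports and attribute access outright
        if isinstance(node, (ast.Import, ast.ImportFrom, ast.Attribute)):
            return False
        # Calls must target a plain name on the allowlist
        if isinstance(node, ast.Call):
            if not isinstance(node.func, ast.Name) or node.func.id not in ALLOWED_CALLS:
                return False
    return True


good = "def new_error_handler():\n    print('recovered')\n"
bad = "def new_error_handler():\n    import os\n    os.system('reboot')\n"
print(validate_code(good), validate_code(bad), validate_code("def ("))  # True False False
```

In practice this check would be one stage among several, alongside unit tests against the candidate handler and execution limits, as the pseudocode's `safety_analysis` and `passes_unit_tests` steps suggest.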

Conclusion

In conclusion, self-rewriting code is no mere relic of a bygone programming era. It has emerged as a crucial technique for realizing the vision of resilient, adaptive, and secure systems. Its strengths—autonomous fault recovery, adaptive diversity, potent code obfuscation, and continuous self-improvement—are matched with its challenges, from verification to operational predictability. As research communities continue to refine both the conceptual and practical foundations, SMC will likely become a bridge that connects the rigidity of legacy software with the adaptive demands of tomorrow’s computing infrastructure. Whether in safeguarding critical infrastructure or enabling new classes of autonomous systems, the dynamic evolution of software will stand as an engine of both innovation and survival in the digital age.

Sources

- Amin, M., Ramazani, A., Monteiro, F., Diou, C., & Dandache, A. (2011). A Self‐Checking Hardware Journal for a Fault‐Tolerant Processor Architecture. International Journal of Reconfigurable Computing, 2011(1), 962062.

- Anckaert, B., Madou, M., & De Bosschere, K. (2006, July). A model for self-modifying code. In International Workshop on Information Hiding (pp. 232-248). Berlin, Heidelberg: Springer Berlin Heidelberg.

- Behera, C. K., & Bhaskari, D. L. (2017). Self-modifying code: a provable technique for enhancing program obfuscation. International Journal of Secure Software Engineering (IJSSE), 8(3), 24-41.

- Ludwig, M., & Noah, D. (2017). The giant black book of computer viruses. American Eagle Books.

- ScienceDirect overview on self-modifying code. https://www.sciencedirect.com/topics/computer-science/self-modifying-code

- Solouki, M. A., Angizi, S., & Violante, M. (2024). Dependability in embedded systems: a survey of fault tolerance methods and software-based mitigation techniques. IEEE Access.

- Waddell, H. (2020). Achieving Obfuscation Through Self-Modifying Code: A Theoretical Model.